Big Data Engineering for Identity Verification Companies

Problems You Shouldn’t Be Solving Alone

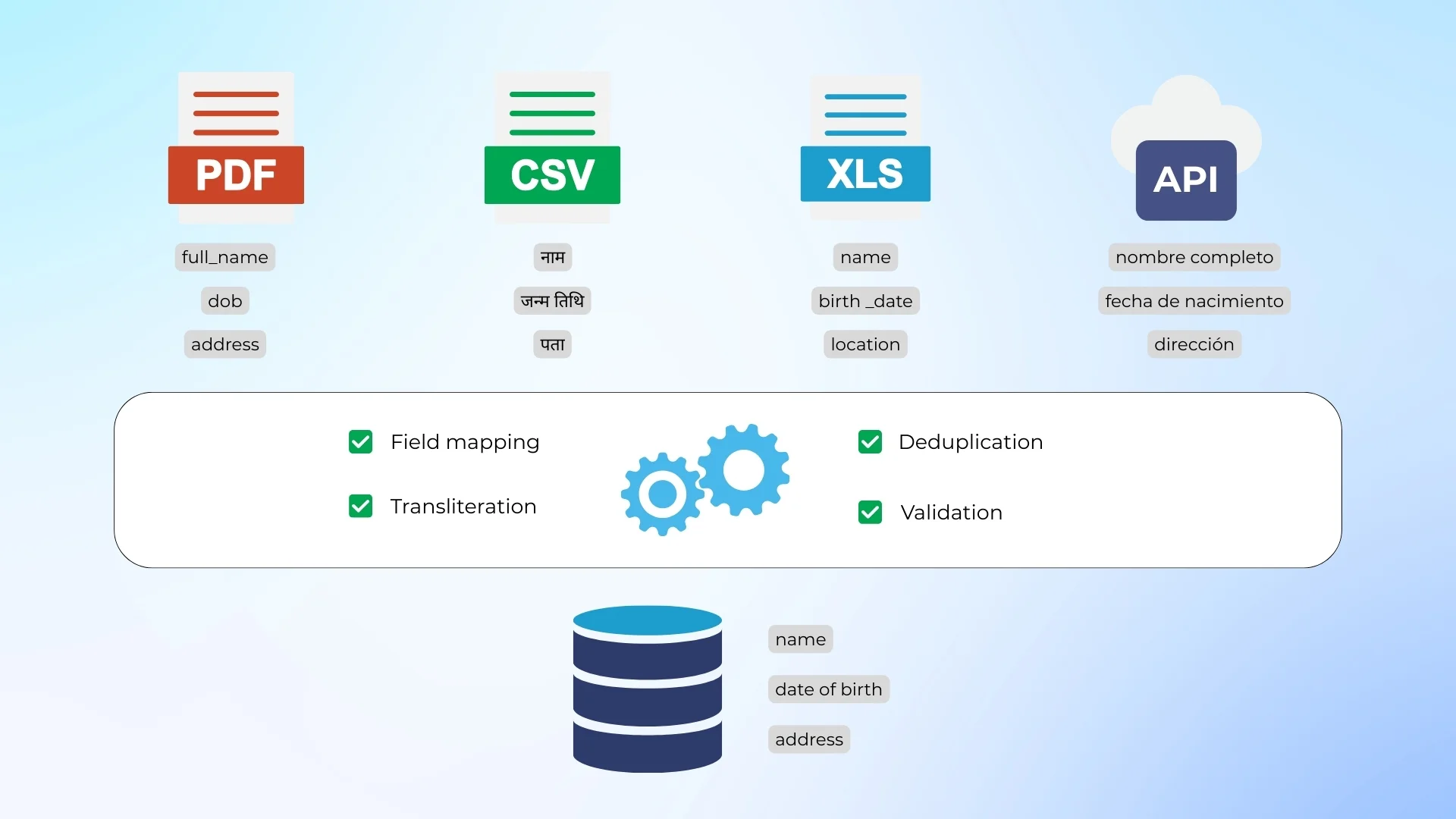

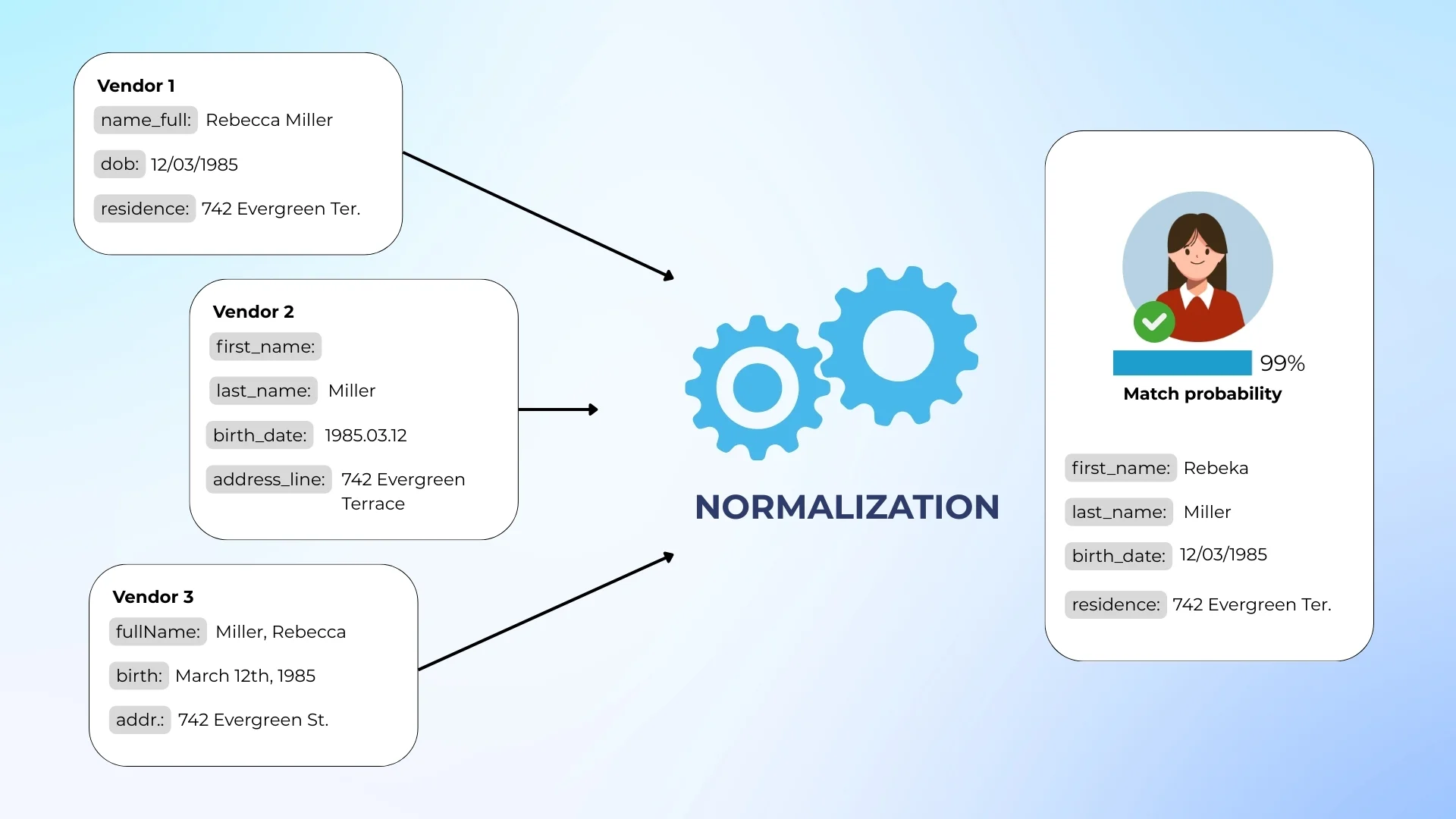

You’re ingesting data from dozens of vendors, each with its own formats and field names. The result: mismatches, failed joins, and constant rework.

- Our solution: We normalize, validate, and align data across all sources.

Example: Integrated 36+ regional registries into a single dataset of 1B+ records (India Voter Project).
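To make "normalize, validate, and align" concrete, here is a minimal Python sketch of per-vendor normalization. The vendor names, field maps, and date formats are hypothetical. A production pipeline would load these mappings from configuration and cover many more fields. Each vendor record is mapped onto one canonical schema, cleaned, and checked for required fields.

```python
from datetime import datetime

# Hypothetical per-vendor field mappings: vendor field name -> canonical field name.
FIELD_MAPS = {
    "vendor_a": {"fname": "first_name", "lname": "last_name", "dob": "birth_date"},
    "vendor_b": {"FirstName": "first_name", "Surname": "last_name", "DateOfBirth": "birth_date"},
}

# Hypothetical per-vendor date formats; everything is converted to ISO 8601.
DATE_FORMATS = {"vendor_a": "%d/%m/%Y", "vendor_b": "%Y%m%d"}

REQUIRED = ("first_name", "last_name", "birth_date")


def normalize(vendor: str, raw: dict) -> dict:
    """Map a raw vendor record onto the canonical schema, clean it, and validate it."""
    mapping = FIELD_MAPS[vendor]
    record = {canon: raw[src] for src, canon in mapping.items() if src in raw}
    # Trim whitespace and standardize casing on name fields.
    for key in ("first_name", "last_name"):
        if key in record:
            record[key] = record[key].strip().title()
    # Convert the vendor-specific date string to ISO format (YYYY-MM-DD).
    if "birth_date" in record:
        parsed = datetime.strptime(record["birth_date"], DATE_FORMATS[vendor])
        record["birth_date"] = parsed.date().isoformat()
    missing = [k for k in REQUIRED if not record.get(k)]
    if missing:
        raise ValueError(f"{vendor} record missing {missing}")
    return record


record_a = normalize("vendor_a", {"fname": " priya ", "lname": "SHARMA", "dob": "05/03/1990"})
record_b = normalize("vendor_b", {"FirstName": "Priya", "Surname": "Sharma", "DateOfBirth": "19900305"})
print(record_a == record_b)  # True: both become Priya / Sharma / 1990-03-05
```

Once both vendors land in the same shape, joins and deduplication can run on one schema instead of N vendor-specific ones.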

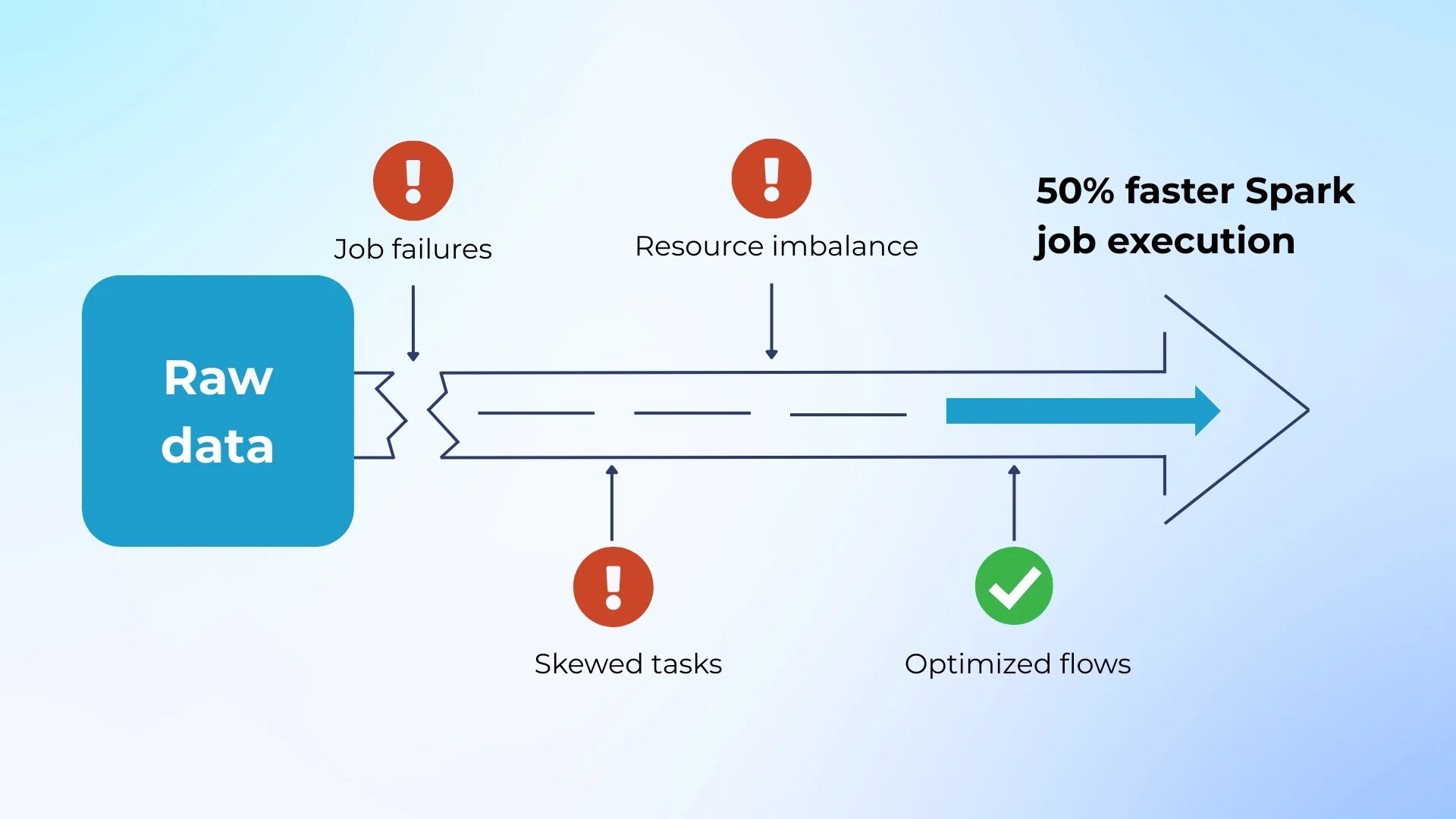

Slow batch jobs, skewed Spark workloads, unbalanced resource allocation, and frequent failures stall your data delivery.

- Our solution: We apply salting, broadcast joins, and resource-aware orchestration (Airflow).

Example: 50%+ faster Spark job execution and stable workflows that scale with volume.
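Salting in a nutshell: on the large, skewed side, a hot join key is split into several sub-keys by appending a random salt. The small side is then replicated once per salt value, so the join still finds every match. The sketch below shows that logic in plain Python so it runs anywhere. In PySpark, the same idea is usually a salt column built with `F.floor(F.rand() * n)` plus an exploded salt range on the other side. When one side is small enough to fit in memory, `F.broadcast()` avoids the shuffle altogether. The salt count and sample data are illustrative assumptions.

```python
import random
from collections import Counter, defaultdict

NUM_SALTS = 4  # assumption: sub-partitions per key; in practice tuned to the observed skew


def salt_large_side(rows, key, num_salts=NUM_SALTS, seed=0):
    """Tag each row of the large, skewed side with a random salt in [0, num_salts)."""
    rng = random.Random(seed)
    return [((row[key], rng.randrange(num_salts)), row) for row in rows]


def explode_small_side(rows, key, num_salts=NUM_SALTS):
    """Copy each row of the small side once per salt so every salted key still finds its match."""
    return [((row[key], salt), row) for row in rows for salt in range(num_salts)]


def salted_join(large, small, key, num_salts=NUM_SALTS):
    """Inner join on (key, salt); each salted key models one shuffle partition."""
    lookup = defaultdict(list)
    for salted_key, row in explode_small_side(small, key, num_salts):
        lookup[salted_key].append(row)
    salted_large = salt_large_side(large, key, num_salts)
    joined = [{**row, **match} for salted_key, row in salted_large for match in lookup[salted_key]]
    # Rows per salted key: the hot key is now spread over several buckets instead of one.
    load = Counter(salted_key for salted_key, _ in salted_large)
    return joined, load


# Illustrative data: one hot region (MH) dominates, as in a skewed registry extract.
large = [{"region": "MH", "record_id": i} for i in range(1000)] + [{"region": "GA", "record_id": 1000}]
small = [{"region": "MH", "region_name": "Maharashtra"}, {"region": "GA", "region_name": "Goa"}]
joined, load = salted_join(large, small, "region")
print(len(joined))  # 1001, the same row count as an unsalted join
print(sorted(n for (region, _), n in load.items() if region == "MH"))  # MH rows split across buckets
```

The trade-off is replication: the small side grows by a factor of `num_salts`. That is why salting suits joins where the other side is modest, and a broadcast join is usually preferred when it fits in memory.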

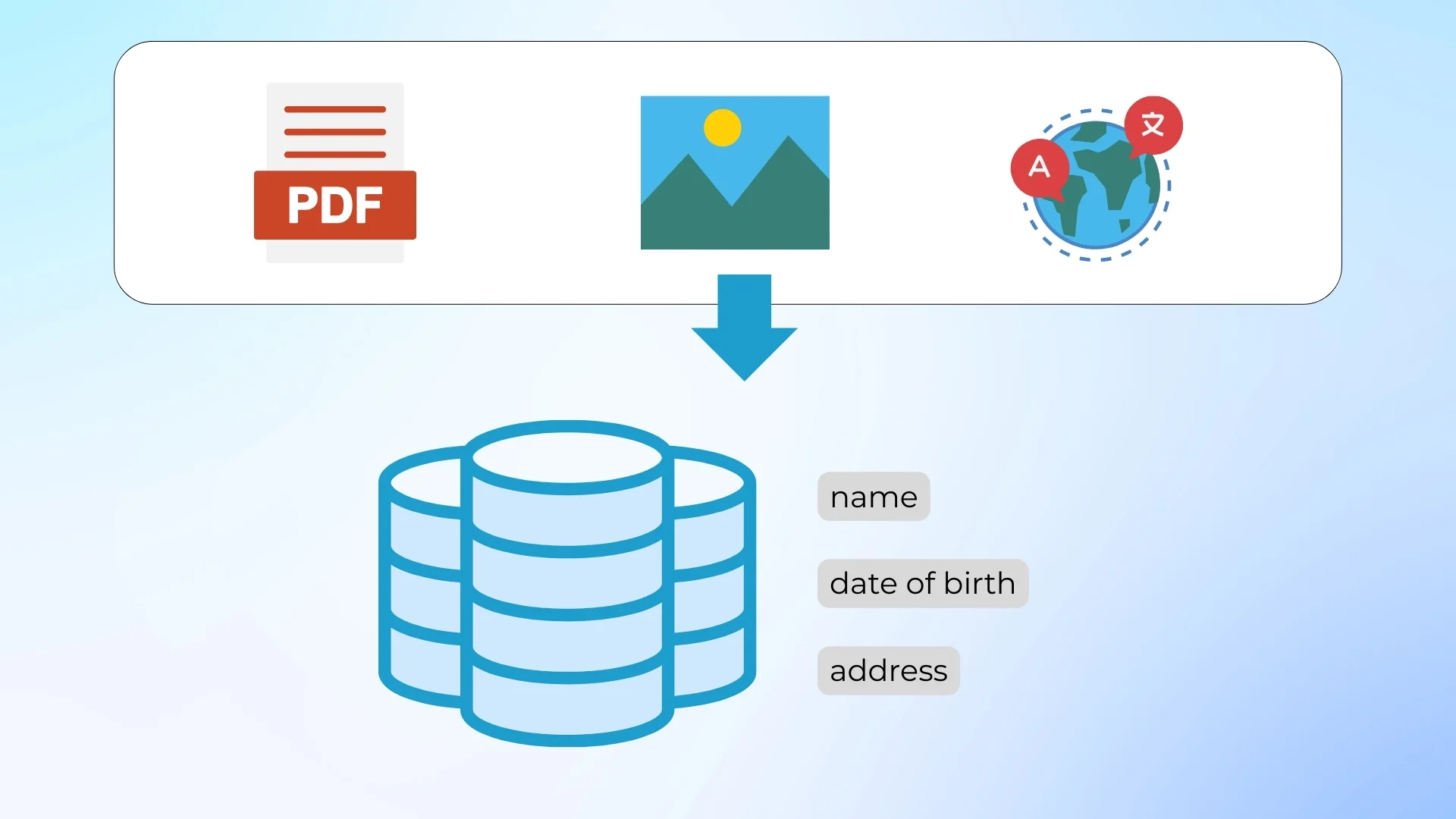

Your data includes PDFs, images, or multilingual inputs, none of which play well with standard parsers.

- Our solution: Custom OCR pipelines, ML-based transliteration, and AI-powered data parsing.

Example: Parsed 18M+ PDFs and extracted 1.2M+ data points in 3 days at $0.0007 per file.

You can’t hire fast enough, coordination eats time, and your best engineers are stuck fixing glue code.

- Our solution: A ready-to-go, self-managed team (Scala, Python, DevOps, QA) integrated into your stack in 2–4 weeks.

Example: Full ownership of the data pipeline, with zero hiring, HR, or delivery-management overhead.

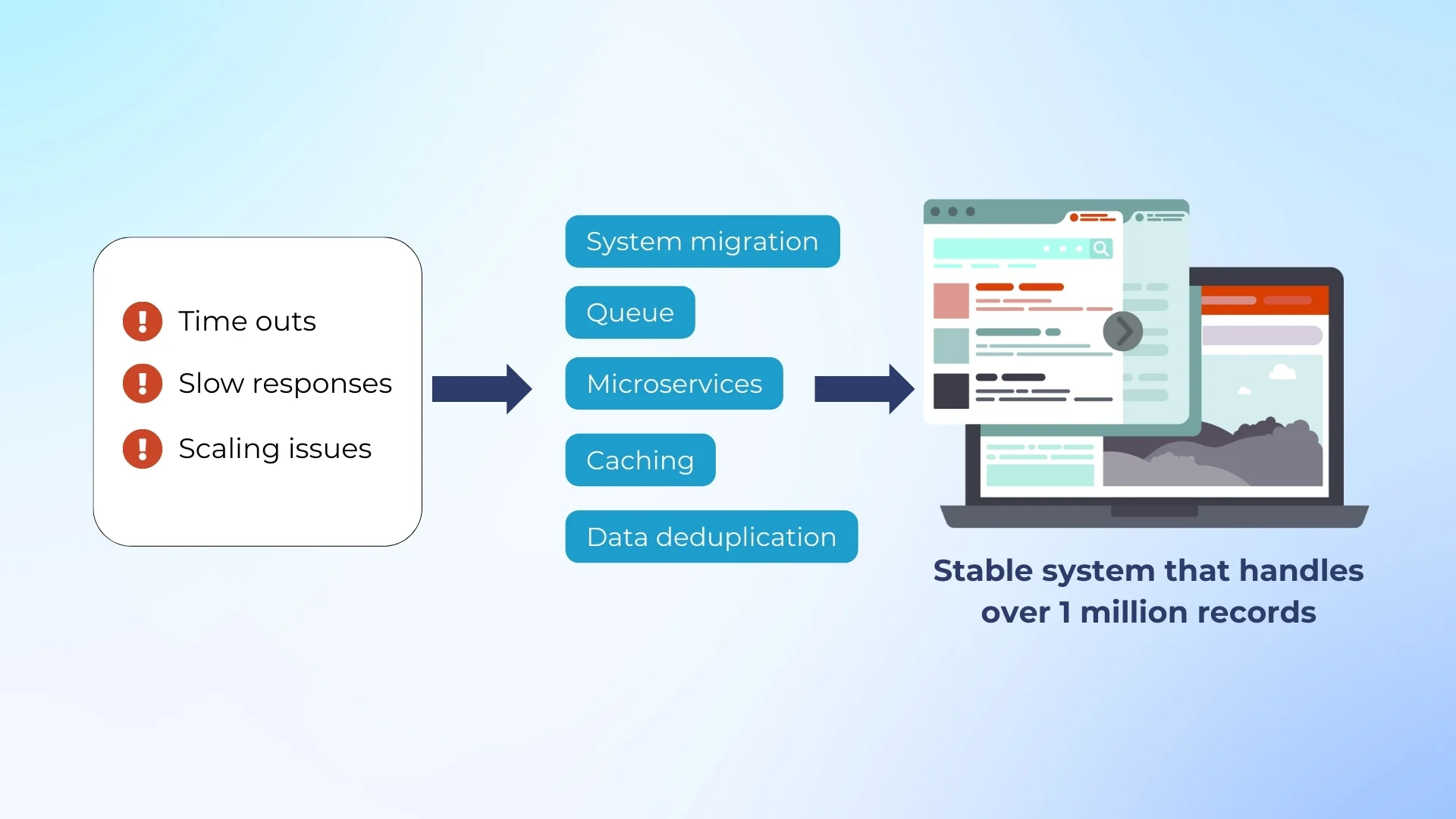

You rely on APIs or microservices to expose new data, but these systems often choke under batch loads or fail to scale.

- Our solution: We build ingestion-focused services: queue-driven, fault-tolerant, cache-optimized microservices for high-throughput data ops.

Example: Real-time ingestion for 1M+ daily records with sub-second API response times and zero downtime under load.
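Here is a minimal sketch of the queue-driven, fault-tolerant pattern. Failed messages are retried a bounded number of times and then parked in a dead-letter list, so they never block the stream. Python's in-process `queue.Queue` stands in for a real message broker. The retry limit, the `Message` wrapper, and the `store_record` handler are hypothetical and included only for illustration.

```python
import queue
from dataclasses import dataclass

MAX_ATTEMPTS = 3  # assumption: retry budget per message before it is dead-lettered


@dataclass
class Message:
    payload: dict
    attempts: int = 0


def run_worker(inbox: queue.Queue, handler, dead_letters: list) -> list:
    """Drain the inbox, requeueing failures and dead-lettering messages that keep failing."""
    processed = []
    while True:
        try:
            msg = inbox.get_nowait()
        except queue.Empty:
            break
        try:
            processed.append(handler(msg.payload))
        except Exception:
            msg.attempts += 1
            if msg.attempts < MAX_ATTEMPTS:
                inbox.put(msg)  # retry later, behind the messages already waiting
            else:
                dead_letters.append(msg)  # park it so one bad record cannot stall the stream
        finally:
            inbox.task_done()
    return processed


def store_record(payload: dict) -> int:
    """Hypothetical handler: rejects records without an id."""
    if "id" not in payload:
        raise ValueError("malformed record")
    return payload["id"]


inbox = queue.Queue()
for payload in ({"id": 1}, {"bad": True}, {"id": 2}):
    inbox.put(Message(payload))
dead_letters = []
print(run_worker(inbox, store_record, dead_letters))  # [1, 2]
print(len(dead_letters), dead_letters[0].attempts)    # 1 3
```

Requeueing a failed message at the back, instead of retrying it immediately, lets healthy records keep flowing while the bad one waits its turn.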

When multiple vendors provide overlapping data, you end up with partial records, mismatched names, or duplicated entries. That breaks identity graphs and pollutes search results.

- Our solution: We implement rule-based and probabilistic entity resolution models, configured per dataset, to unify and deduplicate entities across sources.

Example: Resolved 4.5M+ person profiles with 85%+ match accuracy, integrating data from several databases into single entities.

What We Build and Deliver

ETL for ID Verification

We build full-cycle ETL pipelines that power identity verification. Our team collects raw data from any public source: APIs, PDFs, third-party vendors, or websites. We clean, normalize, validate, and enrich that data to standardize formats and eliminate duplicates. During transformation, we apply match logic to unify records tied to the same person, even if fields are inconsistent or partially missing. The result: a stream of verified, deduplicated profiles ready for storage, real-time decisioning, or compliance checks.

API Development

We build APIs that connect your platform to external identity data. Whether you're pulling user data from third-party providers or pushing verified profiles to client systems, our APIs act as a single, secure access point. We handle the complexity behind the scenes: schema normalization, error handling, throttling, and fallback strategies. You get one clean interface, regardless of how many vendors or regions you work with. Everything is built to scale, with logging, monitoring, and permission controls baked in. That means faster integration cycles, lower maintenance costs, and better control over the entire verification flow.
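Throttling and fallback, sketched: each upstream provider gets a token-bucket rate limiter. A lookup walks the providers in priority order and skips any that are throttled or erroring. The provider names, rates, and fetch functions are hypothetical. A real service would also add timeouts, logging, and narrower exception handling.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: refills `rate` tokens per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def lookup_with_fallback(query, providers):
    """Try each (name, bucket, fetch) provider in order; skip throttled or failing ones."""
    errors = {}
    for name, bucket, fetch in providers:
        if not bucket.allow():
            errors[name] = "throttled"
            continue
        try:
            return name, fetch(query)
        except Exception as exc:  # a real service would narrow this and log it
            errors[name] = repr(exc)
    raise RuntimeError(f"all providers failed: {errors}")


def primary_fetch(query):
    raise ConnectionError("upstream timeout")  # simulate an outage at the primary vendor


def secondary_fetch(query):
    return {"id": query, "status": "verified"}


providers = [
    ("primary", TokenBucket(rate=5, capacity=5), primary_fetch),
    ("secondary", TokenBucket(rate=5, capacity=5), secondary_fetch),
]
print(lookup_with_fallback("user-42", providers))
# ('secondary', {'id': 'user-42', 'status': 'verified'})
```

Passing the clock in as a parameter keeps the limiter testable: a fake clock can simulate time passing without sleeping.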

Data Pipeline Optimization

Identity verification pipelines often suffer from slow processing, low match rates, and system bottlenecks. We help you fix that. Our team audits your entire pipeline, from data ingestion to final delivery, to identify and resolve inefficiencies. We optimize match logic, reduce processing time, and streamline how data moves between components. That means faster user onboarding, more accurate identity resolution, and fewer failures in high-volume scenarios. You get a system that runs faster, costs less, and scales with your business.

Third-party Data Integration

You want to enrich your identity verification process with external data. But each new source adds complexity. One API returns XML, another delivers JSON with missing fields. Some change without notice. We build robust integrations with public watchlists, national ID registries, and private data providers, so your team doesn’t have to. We standardize incoming data, manage vendor-specific quirks, and ensure it flows smoothly into your pipeline.
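Here is a small sketch, using only the standard library, of how two vendor formats (one XML, one JSON) can be folded into one canonical record. The payload shapes, element and attribute names, and the canonical field set are invented for the example. Missing or empty fields come through as `None`, so they don't break the pipeline.

```python
import json
import xml.etree.ElementTree as ET

CANONICAL_FIELDS = ("full_name", "country", "list_name")


def from_json(body: str) -> dict:
    """Hypothetical JSON vendor: {"name": ..., "country": ..., "source": ...}."""
    data = json.loads(body)
    return {"full_name": data.get("name"), "country": data.get("country"), "list_name": data.get("source")}


def from_xml(body: str) -> dict:
    """Hypothetical XML vendor: <Entry list="..."><FullName/><Country/></Entry>."""
    root = ET.fromstring(body)
    return {"full_name": root.findtext("FullName"), "country": root.findtext("Country"), "list_name": root.get("list")}


PARSERS = {"application/json": from_json, "application/xml": from_xml}


def standardize(content_type: str, body: str) -> dict:
    """Route by content type, then trim values and map empty or missing fields to None."""
    raw = PARSERS[content_type](body)
    record = {}
    for field in CANONICAL_FIELDS:
        value = raw.get(field)
        record[field] = value.strip() if isinstance(value, str) and value.strip() else None
    if record["country"]:
        record["country"] = record["country"].upper()  # country codes in upper case
    return record


json_body = '{"name": "Jane Doe", "country": "gb", "source": "watchlist-a"}'
xml_body = '<Entry list="watchlist-a"><FullName> Jane Doe </FullName><Country>GB</Country></Entry>'
print(standardize("application/json", json_body) == standardize("application/xml", xml_body))  # True
```

Adding a vendor then means writing one more small parser and registering it in `PARSERS`. Everything downstream keeps seeing the same canonical record.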

Back-end Development

Need custom tools to support your verification workflows? We can build them. From internal systems for case review and manual verification to APIs that serve verified user profiles to your clients, we handle it all. Our engineers develop microservices, queue processors, webhook handlers, monitoring modules, and admin dashboards that integrate directly into your identity stack. Whether you’re scaling up your fraud detection logic, need secure data access layers, or want to modernize legacy components, we bring the backend expertise to get it done.

Entity Resolution

When identity data comes from multiple sources, the same person often shows up under different names, formats, or partial records. We build systems that compare, match, and unify records across fragmented datasets using fuzzy matching, rule-based scoring, and probabilistic methods. Whether it’s joining government data with customer onboarding info or matching across watchlists and user-submitted forms, we help you determine whether two records refer to the same person. In the end, you get cleaner profiles, more accurate decisions, and a lower risk of duplicate or missed matches in your verification flow.
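Here is a toy version of the matching logic described above. Names are normalized (accents stripped, tokens sorted) and compared with a fuzzy similarity from Python's `difflib`. That similarity is then combined with rule-based evidence, such as an exact date-of-birth match. The weights and the 0.8 threshold are illustrative assumptions. The weighted score stands in for a trained probabilistic model, for example a Fellegi-Sunter style model that learns field weights from data. Comparing all pairs is quadratic, so at millions of records a blocking step (for example, grouping by birth year) would narrow the candidate pairs first.

```python
import unicodedata
from difflib import SequenceMatcher
from itertools import combinations


def normalize_name(name: str) -> str:
    """Lowercase, strip accents, and sort tokens so 'Sharma, Priya' equals 'Priya Sharma'."""
    decomposed = unicodedata.normalize("NFKD", name)
    ascii_only = "".join(c for c in decomposed if not unicodedata.combining(c))
    tokens = ascii_only.lower().replace(",", " ").split()
    return " ".join(sorted(tokens))


def match_score(a: dict, b: dict) -> float:
    """Blend fuzzy name similarity with rule-based evidence; weights are illustrative assumptions."""
    name_sim = SequenceMatcher(None, normalize_name(a["name"]), normalize_name(b["name"])).ratio()
    score = 0.6 * name_sim
    if a.get("dob") and a.get("dob") == b.get("dob"):
        score += 0.3  # exact date-of-birth agreement is strong evidence
    if a.get("city") and b.get("city") and a["city"].lower() == b["city"].lower():
        score += 0.1
    return score


def resolve(records: list, threshold: float = 0.8) -> list:
    """Cluster records whose pairwise score clears the threshold, using union-find."""
    parent = list(range(len(records)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i, j in combinations(range(len(records)), 2):
        if match_score(records[i], records[j]) >= threshold:
            parent[find(i)] = find(j)

    clusters = {}
    for i in range(len(records)):
        clusters.setdefault(find(i), []).append(records[i]["id"])
    return sorted(clusters.values())


records = [
    {"id": "a1", "name": "Priya Sharma", "dob": "1990-03-05", "city": "Pune"},
    {"id": "b7", "name": "Sharma, Priya", "dob": "1990-03-05", "city": "pune"},
    {"id": "c3", "name": "Priya Sharme", "dob": "1990-03-05", "city": "Mumbai"},
    {"id": "d9", "name": "Rahul Verma", "dob": "1985-11-20", "city": "Pune"},
]
print(resolve(records))  # [['a1', 'b7', 'c3'], ['d9']]
```

Union-find makes matches transitive: if A matches B and B matches C, all three end up in one profile. This is convenient, but in practice it is usually paired with a stricter threshold to avoid chaining unrelated records together.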

How We Work: Intsurfing Collaboration Models

Why Choose Intsurfing for Your ID Verification Platform

10+ Years of Experience

Our team has solved tough problems: entity resolution across multilingual datasets, parsing millions of documents with AI, and building pipelines that scale across regions. We know what it takes to get from messy input to clean output.

Specialized in High-Volume Data

We design systems that handle millions of identity records for real-time fraud detection, large-scale onboarding, or watchlist screening. Our pipelines are optimized for speed, memory efficiency, and parallel processing, so performance stays consistent under load.

Flexible Collaboration Models

You can work with us the way that fits you best. Need data? We’ll deliver it. Need a team? We’ll assemble a managed team that works like part of your company, with no hiring or management on your side.